Nebius in dstack Sky GPU marketplace, with production-ready GPU clusters

dstack is an open-source control plane for orchestrating GPU workloads. It can provision cloud VMs, run on top of Kubernetes, or manage on-prem clusters. If you don’t want to self-host, you can use dstack Sky, the managed version of dstack that also provides access to cloud GPUs via its marketplace.

With our latest release, we’re excited to announce that Nebius, a purpose-built AI cloud for large-scale training and inference, has joined the dstack Sky marketplace to offer on-demand and spot GPUs, including clusters.

Last week we published the state of cloud GPU, a study of the GPU market. As noted there, Nebius is one of the few purpose-built AI clouds delivering performant and resilient GPUs at scale — available on-demand, as spot instances, and as full clusters.

Nebius designs and operates its own GPU servers in energy-efficient data centers, giving full control over quality, performance tuning, and delivery timelines. Every cluster undergoes a three-stage validation — hardware burn-in, reference architecture checks, and long-haul stress tests — ensuring production-ready infrastructure with consistent performance for large-scale AI training.

Since early this year, the open-source dstack has supported Nebius, making it easy to manage clusters and orchestrate compute cost-effectively.

About dstack Sky

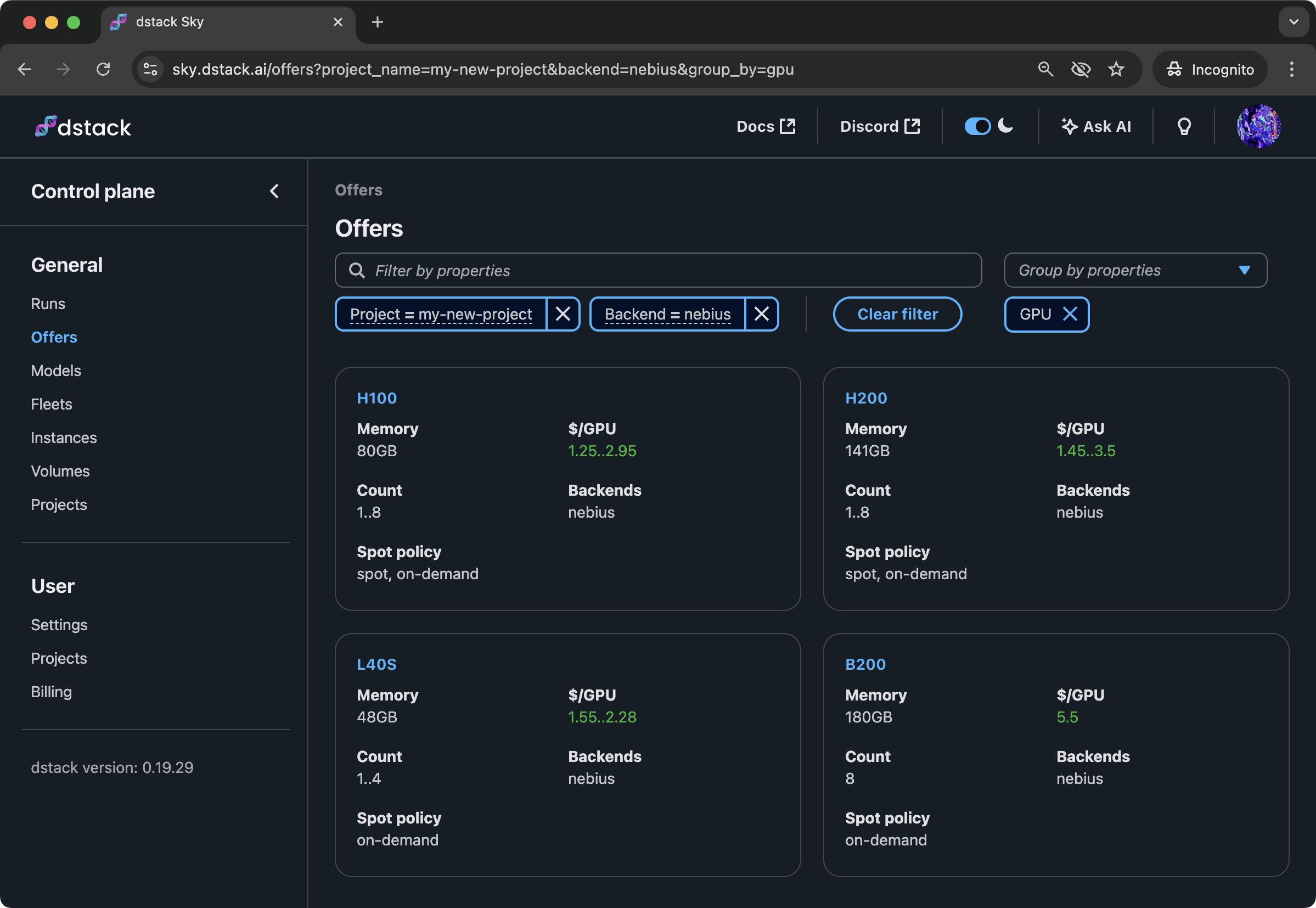

With this week's release, Nebius officially joins dstack Sky. Nebius can now be used not only with your own account, but also directly via the GPU marketplace.

The marketplace lets you access Nebius GPUs without having a Nebius account. You can pay through dstack Sky, and switch to your own Nebius account anytime with just a few clicks.

While the open-source version of dstack has supported Nebius clusters from day one, Nebius is the first provider to bring on-demand and spot GPU clusters to dstack Sky.

With Nebius, dstack Sky users can orchestrate NVIDIA GPUs provisioned in hours, with optimized InfiniBand networking to minimize bottlenecks, non-virtualized GPUs for predictable throughput, and industry-leading MTBF/MTTR proven on multi-thousand-GPU clusters.

Getting started

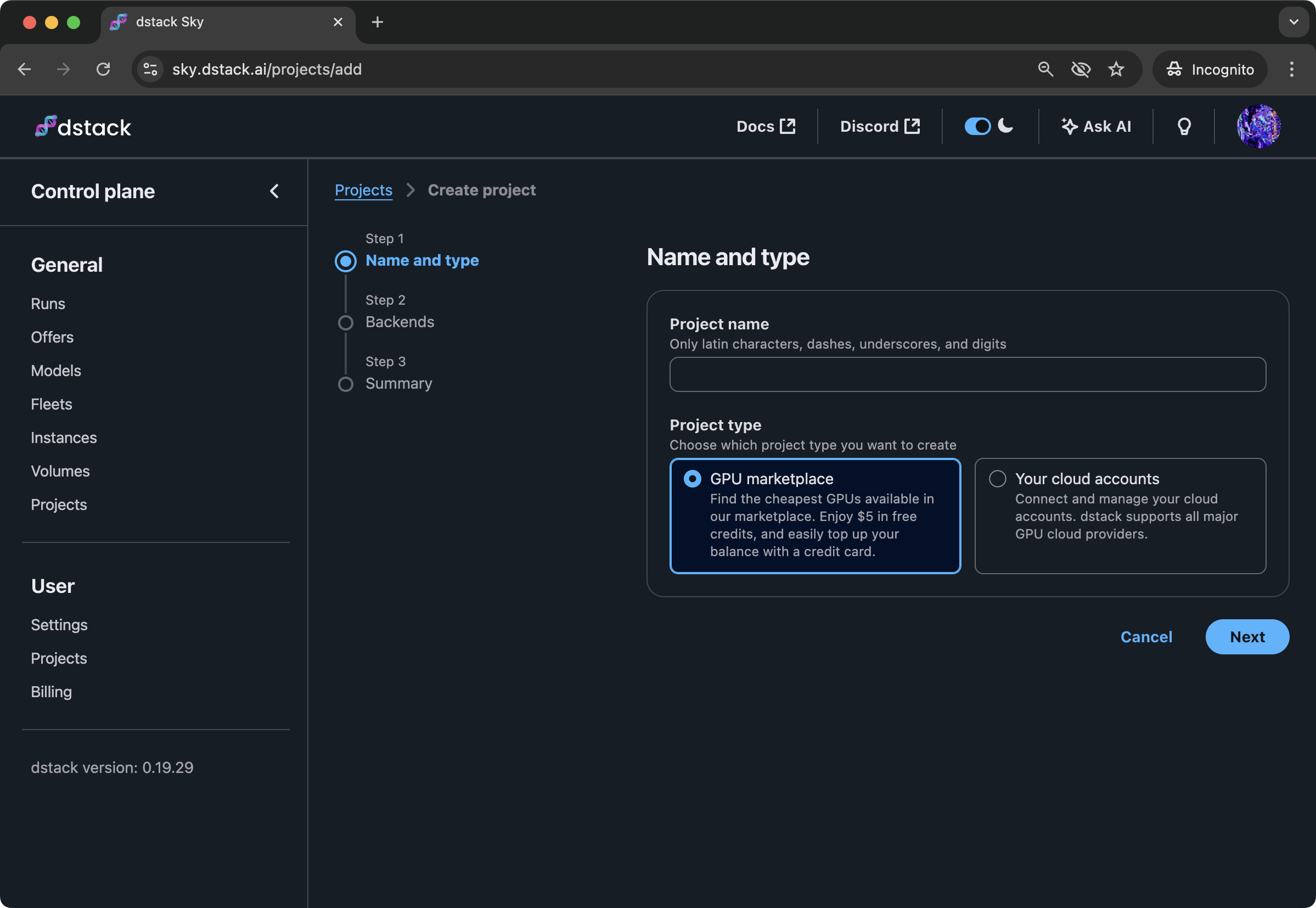

After you sign up with dstack Sky, you’ll be prompted to create a project and choose between the GPU marketplace or your own cloud account.

Once the project is created, install the dstack CLI:

    $ uv tool install dstack -U

Or, if you prefer pip:

    $ pip install dstack -U

Now you can define dev environments, tasks, services, and fleets, then apply them with dstack apply.

dstack provisions cloud VMs, sets up environments, orchestrates runs, and handles everything required for development, training, or deployment.
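As a minimal sketch of what such a configuration can look like, the dev environment below requests a single GPU on Nebius and lets dstack pick between spot and on-demand capacity. The file name, run name, Python version, and GPU spec are illustrative assumptions rather than values from this release.

```yaml
# .dstack.yml (hypothetical file name)
type: dev-environment
name: nebius-dev          # illustrative name
python: "3.12"            # assumed version; use whatever your project needs
ide: vscode

backends: [nebius]
spot_policy: auto         # use spot if available, otherwise on-demand

resources:
  gpu: H100:1             # assumed GPU spec
```

Applying it with dstack apply -f .dstack.yml should provision the instance and print a link for attaching your IDE.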

To create a Nebius cluster, for example for distributed training, define the following fleet configuration:

    type: fleet
    name: my-cluster

    placement: cluster
    nodes: 2

    backends: [nebius]

    resources:
      gpu: H100:8

Then, create it via dstack apply:

    $ dstack apply -f my-cluster.dstack.yml

Once the fleet is ready, you can run distributed tasks.

dstack automatically configures drivers, networking, and fast GPU-to-GPU interconnect.
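For illustration, a distributed task on this fleet might look like the sketch below. It assumes dstack's documented DSTACK_* environment variables for multi-node runs and a hypothetical train.py script in your repository. The run name, Python version, port, and package install step are likewise assumptions.

```yaml
type: task
name: train-distrib       # illustrative name
nodes: 2                  # matches the two-node fleet above
python: "3.12"            # assumed version

commands:
  - uv pip install torch  # assumed dependency step
  - |
    torchrun \
      --nproc-per-node=$DSTACK_GPUS_PER_NODE \
      --nnodes=$DSTACK_NODES_NUM \
      --node-rank=$DSTACK_NODE_RANK \
      --master-addr=$DSTACK_MASTER_NODE_IP \
      --master-port=12345 \
      train.py            # hypothetical training script

resources:
  gpu: H100:8
  shm_size: 16GB
```

Running dstack apply -f on this file would start the same commands on each node, with the variables filled in per node so that torchrun can form a single job.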

To learn more, see the clusters guide.

With Nebius joining dstack Sky, users can now run on-demand and spot GPUs and clusters directly through the marketplace. This gives them access to the same production-grade infrastructure Nebius customers use for frontier-scale training, without needing a separate Nebius account.

If you prefer to go self-hosted, you can always switch to the open-source version of dstack, which provides the same functionality.

Our goal is to give teams maximum flexibility while removing the complexity of managing infrastructure. More updates are coming soon.

How does dstack compare to Kubernetes?

dstack can run either on top of Kubernetes or directly on cloud VMs.

In both cases, you don’t need to manage Kubernetes yourself: dstack handles container and GPU orchestration, providing a simple, multi-cloud interface for development, training, and inference.

What's next

- Sign up with dstack Sky

- Check the Quickstart

- Learn more about Nebius

- Explore dev environments, tasks, services, and fleets

- Read the clusters guide