Define a configuration

Create the following run configuration inside your project folder:

```yaml

type: task

name: streamlit

# If `image` is not specified, dstack uses its default image

python: "3.11"

#image: dstackai/base:py3.13-0.7-cuda-12.1

# Commands of the task

commands:

- pip install streamlit

- streamlit hello

# Ports to forward

ports:

- 8501

# Uncomment to request resources

#resources:

# gpu: 24GB

```

By default, tasks run on a single instance. To run a distributed task, specify

[`nodes`](concepts/tasks.md#distributed-tasks), and `dstack` will run it on a cluster.

Apply the configuration

Run the configuration via [`dstack apply`](reference/cli/dstack/apply.md):

```shell

$ dstack apply -f task.dstack.yml

# BACKEND REGION RESOURCES SPOT PRICE

1 gcp us-west4 2xCPU, 8GB, 100GB (disk) yes $0.010052

2 azure westeurope 2xCPU, 8GB, 100GB (disk) yes $0.0132

3 gcp europe-central2 2xCPU, 8GB, 100GB (disk) yes $0.013248

Submit the run streamlit? [y/n]: y

Provisioning `streamlit`...

---> 100%

Welcome to Streamlit. Check out our demo in your browser.

Local URL: http://localhost:8501

```

If you specified `ports`, they will be automatically forwarded to `localhost` for convenient access.

=== "Service"

A [service](concepts/services.md) allows you to deploy a model or any web app as an endpoint.

Define a configuration

Create the following run configuration inside your project folder:

```yaml

type: service

name: llama31-service

# If `image` is not specified, dstack uses its default image

python: "3.11"

#image: dstackai/base:py3.13-0.7-cuda-12.1

# Required environment variables

env:

- HF_TOKEN

commands:

- pip install vllm

- vllm serve meta-llama/Meta-Llama-3.1-8B-Instruct --max-model-len 4096

# Expose the vllm server port

port: 8000

# Specify a name if it's an OpenAI-compatible model

model: meta-llama/Meta-Llama-3.1-8B-Instruct

# Required resources

resources:

gpu: 24GB

```

Apply the configuration

Run the configuration via [`dstack apply`](reference/cli/dstack/apply.md):

```shell

$ HF_TOKEN=...

$ dstack apply -f service.dstack.yml

# BACKEND REGION INSTANCE RESOURCES SPOT PRICE

1 aws us-west-2 g5.4xlarge 16xCPU, 64GB, 1xA10G (24GB) yes $0.22

2 aws us-east-2 g6.xlarge 4xCPU, 16GB, 1xL4 (24GB) yes $0.27

3 gcp us-west1 g2-standard-4 4xCPU, 16GB, 1xL4 (24GB) yes $0.27

Submit the run llama31-service? [y/n]: y

Provisioning `llama31-service`...

---> 100%

Service is published at:

http://localhost:3000/proxy/services/main/llama31-service/

Model meta-llama/Meta-Llama-3.1-8B-Instruct is published at:

http://localhost:3000/proxy/models/main/

```

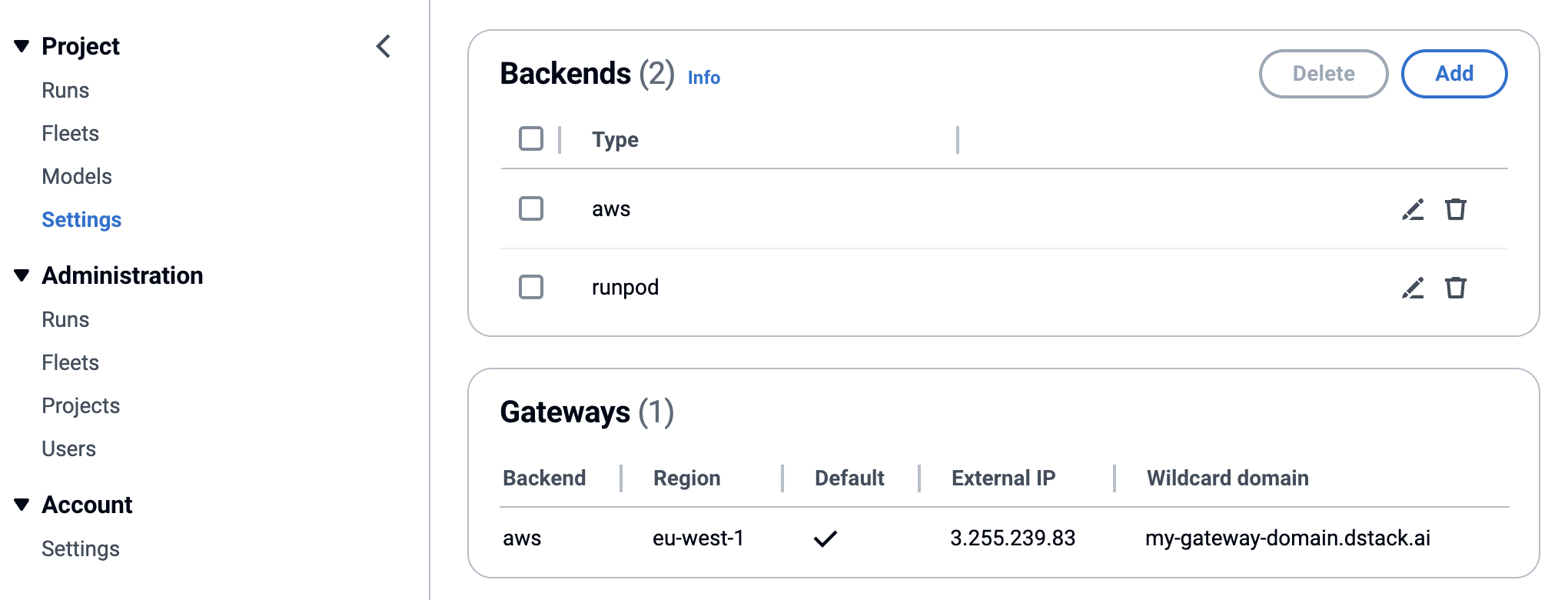

!!! info "Gateway"

To enable [auto-scaling](concepts/services.md#replicas-and-scaling),

[rate limits](concepts/services.md#rate-limits),

or use a custom domain with HTTPS,

set up a [gateway](concepts/gateways.md) before running the service.

If you're using [dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"},

a gateway is pre-configured for you.

`dstack apply` automatically provisions instances and runs the workload according to the configuration.

## Troubleshooting

Something not working? See the [troubleshooting](guides/troubleshooting.md) guide.

!!! info "What's next?"

1. Read about [backends](concepts/backends.md), [dev environments](concepts/dev-environments.md), [tasks](concepts/tasks.md), [services](concepts/services.md), and [fleets](concepts/services.md)

2. Browse [examples](../examples.md)

3. Join [Discord :material-arrow-top-right-thin:{ .external }](https://discord.gg/u8SmfwPpMd)

# Concepts

## Backends

# Backends

Backends allow `dstack` to manage compute across various environments.

They can be configured via `~/.dstack/server/config.yml` or through the [project settings page](../concepts/projects.md#backends) in the UI.

`dstack` supports three types of backends:

* [VM-based](#vm-based) – use `dstack`'s native integration with cloud providers to provision VMs, manage clusters, and orchestrate container-based runs.

* [Container-based](#container-based) – use either `dstack`'s native integration with cloud providers or Kubernetes to orchestrate container-based runs; provisioning in this case is delegated to the cloud provider or Kubernetes.

* [On-prem](#on-prem) – use `dstack`'s native support for on-prem servers without needing Kubernetes.

!!! info "dstack Sky"

If you're using [dstack Sky :material-arrow-top-right-thin:{ .external }](https://sky.dstack.ai){:target="_blank"}, backend configuration is optional. dstack Sky lets you use pre-configured backends to access GPU marketplace.

See the examples of backend configuration below.

## VM-based

VM-based backends allow `dstack` users to manage clusters and orchestrate container-based runs across a wide range of cloud providers.

Under the hood, `dstack` uses native integrations with these providers to provision clusters on demand.

Compared to [container-based](#container-based) backends, this approach offers finer-grained, simpler control over cluster provisioning and eliminates the dependency on a Kubernetes layer.

### AWS

There are two ways to configure AWS: using an access key or using the default credentials.

=== "Default credentials"

If you have default credentials set up (e.g. in `~/.aws/credentials`), configure the backend like this:

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: default

```

=== "Access key"

Create an access key by following the [this guide :material-arrow-top-right-thin:{ .external }](https://docs.aws.amazon.com/cli/latest/userguide/cli-authentication-user.html#cli-authentication-user-get).

Once you've downloaded the `.csv` file with your IAM user's Access key ID and Secret access key, proceed to

configure the backend.

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: access_key

access_key: KKAAUKLIZ5EHKICAOASV

secret_key: pn158lMqSBJiySwpQ9ubwmI6VUU3/W2fdJdFwfgO

```

??? info "Required permissions"

The following AWS policy permissions are sufficient for `dstack` to work:

```

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ec2:AttachVolume",

"ec2:AuthorizeSecurityGroupEgress",

"ec2:AuthorizeSecurityGroupIngress",

"ec2:CreatePlacementGroup",

"ec2:CancelSpotInstanceRequests",

"ec2:CreateSecurityGroup",

"ec2:CreateTags",

"ec2:CreateVolume",

"ec2:DeletePlacementGroup",

"ec2:DeleteVolume",

"ec2:DescribeAvailabilityZones",

"ec2:DescribeCapacityReservations"

"ec2:DescribeImages",

"ec2:DescribeInstances",

"ec2:DescribeInstanceAttribute",

"ec2:DescribeInstanceTypes",

"ec2:DescribeRouteTables",

"ec2:DescribeSecurityGroups",

"ec2:DescribeSubnets",

"ec2:DescribeVpcs",

"ec2:DescribeVolumes",

"ec2:DetachVolume",

"ec2:RunInstances",

"ec2:TerminateInstances"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"servicequotas:ListServiceQuotas",

"servicequotas:GetServiceQuota"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"elasticloadbalancing:CreateLoadBalancer",

"elasticloadbalancing:CreateTargetGroup",

"elasticloadbalancing:CreateListener",

"elasticloadbalancing:RegisterTargets",

"elasticloadbalancing:AddTags",

"elasticloadbalancing:DeleteLoadBalancer",

"elasticloadbalancing:DeleteTargetGroup",

"elasticloadbalancing:DeleteListener",

"elasticloadbalancing:DeregisterTargets"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"acm:DescribeCertificate",

"acm:ListCertificates"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"iam:GetInstanceProfile",

"iam:GetRole",

"iam:PassRole"

],

"Resource": "*"

}

]

}

```

The `elasticloadbalancing:*` and `acm:*` permissions are only needed for provisioning gateways with ACM (AWS Certificate Manager) certificates.

The `iam:*` permissions are only needed if you specify `iam_instance_profile` to assign to EC2 instances.

You can also limit permissions to specific resources in your account:

```

{

"Version": "2012-10-17",

"Statement": [

...

{

"Effect": "Allow",

"Action": [

"iam:GetInstanceProfile",

"iam:GetRole",

"iam:PassRole"

],

"Resource": "arn:aws:iam::account-id:role/EC2-roles-for-XYZ-*"

}

]

}

```

??? info "VPC"

By default, `dstack` uses the default VPC. It's possible to customize it:

=== "vpc_name"

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: default

vpc_name: my-vpc

```

=== "vpc_ids"

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: default

default_vpcs: true

vpc_ids:

us-east-1: vpc-0a2b3c4d5e6f7g8h

us-east-2: vpc-9i8h7g6f5e4d3c2b

us-west-1: vpc-4d3c2b1a0f9e8d7

```

For the regions without configured `vpc_ids`, enable default VPCs by setting `default_vpcs` to `true`.

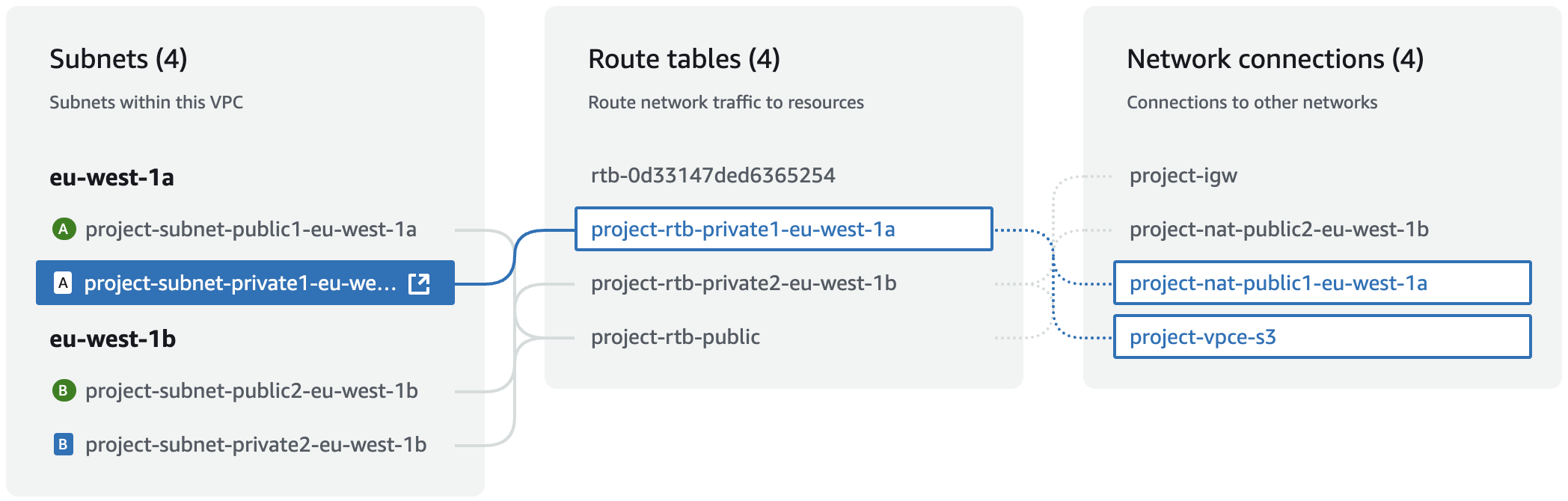

??? info "Private subnets"

By default, `dstack` provisions instances with public IPs and permits inbound SSH traffic.

If you want `dstack` to use private subnets and provision instances without public IPs, set `public_ips` to `false`.

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: default

public_ips: false

```

Using private subnets assumes that both the `dstack` server and users can access the configured VPC's private subnets.

Additionally, private subnets must have outbound internet connectivity provided by NAT Gateway, Transit Gateway, or other mechanism.

??? info "OS images"

By default, `dstack` uses its own [AMI :material-arrow-top-right-thin:{ .external }](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AMIs.html)

optimized for `dstack`.

To use your own or other third-party images, set the `os_images` property:

```yaml

projects:

- name: main

backends:

- type: aws

creds:

type: default

os_images:

cpu:

name: my-ami-for-cpu-instances

owner: self

user: dstack

nvidia:

name: 'Some ThirdParty CUDA image'

owner: 123456789012

user: ubuntu

```

Here, both `cpu` and `nvidia` properties are optional, but if the property is not set, you won´t be able to use the corresponding instance types.

The `name` is an AMI name.

The `owner` is either an AWS account ID (a 12-digit number) or a special value `self` indicating the current account.

The `user` specifies an OS user for instance provisioning.

!!! info "Image requirements"

* SSH server listening on port 22

* `user` with passwordless sudo access

* Docker is installed

* (For NVIDIA instances) NVIDIA/CUDA drivers and NVIDIA Container Toolkit are installed

* The firewall (`iptables`, `ufw`, etc.) must allow external traffic to port 22 and all traffic within the private subnet, and should forbid any other incoming external traffic.

### Azure

There are two ways to configure Azure: using a client secret or using the default credentials.

=== "Default credentials"

If you have default credentials set up, configure the backend like this:

```yaml

projects:

- name: main

backends:

- type: azure

subscription_id: 06c82ce3-28ff-4285-a146-c5e981a9d808

tenant_id: f84a7584-88e4-4fd2-8e97-623f0a715ee1

creds:

type: default

```

If you don't know your `subscription_id` and `tenant_id`, use [Azure CLI :material-arrow-top-right-thin:{ .external }](https://learn.microsoft.com/en-us/cli/azure/install-azure-cli):

```shell

az account show --query "{subscription_id: id, tenant_id: tenantId}"

```

=== "Client secret"

A client secret can be created using the [Azure CLI :material-arrow-top-right-thin:{ .external }](https://learn.microsoft.com/en-us/cli/azure/install-azure-cli):

```shell

SUBSCRIPTION_ID=...

az ad sp create-for-rbac

--name dstack-app \

--role $DSTACK_ROLE \

--scopes /subscriptions/$SUBSCRIPTION_ID \

--query "{ tenant_id: tenant, client_id: appId, client_secret: password }"

```

Once you have `tenant_id`, `client_id`, and `client_secret`, go ahead and configure the backend.

```yaml

projects:

- name: main

backends:

- type: azure

subscription_id: 06c82ce3-28ff-4285-a146-c5e981a9d808

tenant_id: f84a7584-88e4-4fd2-8e97-623f0a715ee1

creds:

type: client

client_id: acf3f73a-597b-46b6-98d9-748d75018ed0

client_secret: 1Kb8Q~o3Q2hdEvrul9yaj5DJDFkuL3RG7lger2VQ

```

If you don't know your `subscription_id`, use [Azure CLI :material-arrow-top-right-thin:{ .external }](https://learn.microsoft.com/en-us/cli/azure/install-azure-cli):

```shell

az account show --query "{subscription_id: id}"

```

??? info "Required permissions"

The following Azure permissions are sufficient for `dstack` to work:

```json

{

"properties": {

"roleName": "dstack-role",

"description": "Minimal required permissions for using Azure with dstack",

"assignableScopes": [

"/subscriptions/${YOUR_SUBSCRIPTION_ID}"

],

"permissions": [

{

"actions": [

"Microsoft.Authorization/*/read",

"Microsoft.Compute/availabilitySets/*",

"Microsoft.Compute/locations/*",

"Microsoft.Compute/virtualMachines/*",

"Microsoft.Compute/virtualMachineScaleSets/*",

"Microsoft.Compute/cloudServices/*",

"Microsoft.Compute/disks/write",

"Microsoft.Compute/disks/read",

"Microsoft.Compute/disks/delete",

"Microsoft.ManagedIdentity/userAssignedIdentities/assign/action",

"Microsoft.ManagedIdentity/userAssignedIdentities/read",

"Microsoft.Network/networkSecurityGroups/*",

"Microsoft.Network/locations/*",

"Microsoft.Network/virtualNetworks/*",

"Microsoft.Network/networkInterfaces/*",

"Microsoft.Network/publicIPAddresses/*",

"Microsoft.Resources/subscriptions/resourceGroups/read",

"Microsoft.Resources/subscriptions/resourceGroups/write",

"Microsoft.Resources/subscriptions/read"

],

"notActions": [],

"dataActions": [],

"notDataActions": []

}

]

}

}

```

The `"Microsoft.Resources/subscriptions/resourceGroups/write"` permission is not required

if [`resource_group`](/docs/reference/server/config.yml/#azure) is specified.

??? info "VPC"

By default, `dstack` creates new Azure networks and subnets for every configured region.

It's possible to use custom networks by specifying `vpc_ids`:

```yaml

projects:

- name: main

backends:

- type: azure

creds:

type: default

regions: [westeurope]

vpc_ids:

westeurope: myNetworkResourceGroup/myNetworkName

```

??? info "Private subnets"

By default, `dstack` provisions instances with public IPs and permits inbound SSH traffic.

If you want `dstack` to use private subnets and provision instances without public IPs,

specify custom networks using `vpc_ids` and set `public_ips` to `false`.

```yaml

projects:

- name: main

backends:

- type: azure

creds:

type: default

regions: [westeurope]

vpc_ids:

westeurope: myNetworkResourceGroup/myNetworkName

public_ips: false

```

Using private subnets assumes that both the `dstack` server and users can access the configured VPC's private subnets.

Additionally, private subnets must have outbound internet connectivity provided by [NAT Gateway or other mechanism](https://learn.microsoft.com/en-us/azure/nat-gateway/nat-overview).

### GCP

There are two ways to configure GCP: using a service account or using the default credentials.

=== "Default credentials"

Enable GCP application default credentials:

```shell

gcloud auth application-default login

```

Then configure the backend like this:

```yaml

projects:

- name: main

backends:

- type: gcp

project_id: gcp-project-id

creds:

type: default

```

=== "Service account"

To create a service account, follow [this guide :material-arrow-top-right-thin:{ .external }](https://cloud.google.com/iam/docs/service-accounts-create). After setting up the service account [create a key :material-arrow-top-right-thin:{ .external }](https://cloud.google.com/iam/docs/keys-create-delete) for it and download the corresponding JSON file.

Then go ahead and configure the backend by specifying the downloaded file path.

```yaml

projects:

- name: main

backends:

- type: gcp

project_id: my-gcp-project

creds:

type: service_account

filename: ~/.dstack/server/gcp-024ed630eab5.json

```

??? info "User interface"

If you are configuring the `gcp` backend on the [project settigns page](projects.md#backends),

specify the contents of the JSON file in `data`:

```yaml

type: gcp

project_id: my-gcp-project

creds:

type: service_account

data: |

{

"type": "service_account",

"project_id": "my-gcp-project",

"private_key_id": "abcd1234efgh5678ijkl9012mnop3456qrst7890",

"private_key": "-----BEGIN PRIVATE KEY-----\nMIIEv...rest_of_key...IDAQAB\n-----END PRIVATE KEY-----\n",

"client_email": "my-service-account@my-gcp-project.iam.gserviceaccount.com",

"client_id": "123456789012345678901",

"auth_uri": "https://accounts.google.com/o/oauth2/auth",

"token_uri": "https://oauth2.googleapis.com/token",

"auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

"client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/my-service-account%40my-gcp-project.iam.gserviceaccount.com",

"universe_domain": "googleapis.com"

}

```

If you don't know your GCP project ID, use [Google Cloud CLI :material-arrow-top-right-thin:{ .external }](https://cloud.google.com/sdk/docs/install-sdk):

```shell

gcloud projects list --format="json(projectId)"

```

??? info "Required permissions"

The following GCP permissions are sufficient for `dstack` to work:

```

compute.disks.create

compute.disks.delete

compute.disks.get

compute.disks.list

compute.disks.setLabels

compute.disks.use

compute.firewalls.create

compute.images.useReadOnly

compute.instances.attachDisk

compute.instances.create

compute.instances.delete

compute.instances.detachDisk

compute.instances.get

compute.instances.setLabels

compute.instances.setMetadata

compute.instances.setServiceAccount

compute.instances.setTags

compute.networks.get

compute.networks.updatePolicy

compute.regions.get

compute.regions.list

compute.reservations.list

compute.resourcePolicies.create

compute.resourcePolicies.delete

compute.routers.list

compute.subnetworks.list

compute.subnetworks.use

compute.subnetworks.useExternalIp

compute.zoneOperations.get

```

If you plan to use TPUs, additional permissions are required:

```

tpu.nodes.create

tpu.nodes.get

tpu.nodes.update

tpu.nodes.delete

tpu.operations.get

tpu.operations.list

```

Also, the use of TPUs requires the `serviceAccountUser` role.

For TPU VMs, dstack will use the default service account.

If you plan to use shared reservations, the `compute.reservations.list`

permission is required in the project that owns the reservations.

??? info "Required APIs"

First, ensure the required APIs are enabled in your GCP `project_id`.

```shell

PROJECT_ID=...

gcloud config set project $PROJECT_ID

gcloud services enable cloudapis.googleapis.com

gcloud services enable compute.googleapis.com

```

??? info "VPC"

=== "VPC"

```yaml

projects:

- name: main

backends:

- type: gcp

project_id: gcp-project-id

creds:

type: default

vpc_name: my-custom-vpc

```

If you specify a non-default VPC, ensure it has a firewall rule

allowing all traffic within the VPC. This is needed for multi-node tasks to work.

The default VPC already permits traffic within the VPC.

=== "Shared VPC"

```yaml

projects:

- name: main

backends:

- type: gcp

project_id: gcp-project-id

creds:

type: default

vpc_name: my-custom-vpc

vpc_project_id: another-project-id

```

When using a Shared VPC, ensure there is a firewall rule allowing `INGRESS` traffic on port `22`.

You can limit this rule to `dstack` instances using the `dstack-runner-instance` target tag.

When using GCP gateways with a Shared VPC, also ensure there is a firewall rule allowing `INGRESS` traffic on ports `22`, `80`, `443`.

You can limit this rule to `dstack` gateway instances using the `dstack-gateway-instance` target tag.

To use TPUs with a Shared VPC, you need to grant the TPU Service Account in your service project permissions

to manage resources in the host project by granting the "TPU Shared VPC Agent" (roles/tpu.xpnAgent) role

([more in the GCP docs](https://cloud.google.com/tpu/docs/shared-vpc-networks#vpc-shared-vpc)).

??? info "Private subnets"

By default, `dstack` provisions instances with public IPs and permits inbound SSH traffic.

If you want `dstack` to use private subnets and provision instances without public IPs, set `public_ips` to `false`.

```yaml

projects:

- name: main

backends:

- type: gcp

creds:

type: default

public_ips: false

```

Using private subnets assumes that both the `dstack` server and users can access the configured VPC's private subnets.

Additionally, [Cloud NAT](https://cloud.google.com/nat/docs/overview) must be configured to provide access to external resources for provisioned instances.

### Lambda

Log into your [Lambda Cloud :material-arrow-top-right-thin:{ .external }](https://lambdalabs.com/service/gpu-cloud) account, click API keys in the sidebar, and then click the `Generate API key`

button to create a new API key.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: lambda

creds:

type: api_key

api_key: eersct_yrpiey-naaeedst-tk-_cb6ba38e1128464aea9bcc619e4ba2a5.iijPMi07obgt6TZ87v5qAEj61RVxhd0p

```

### Nebius

Log into your [Nebius AI Cloud :material-arrow-top-right-thin:{ .external }](https://console.eu.nebius.com/) account, navigate to Access, and select Service Accounts. Create a service account, add it to the editors group, and upload its authorized key.

Then configure the backend:

```yaml

projects:

- name: main

backends:

- type: nebius

creds:

type: service_account

service_account_id: serviceaccount-e00dhnv9ftgb3cqmej

public_key_id: publickey-e00ngaex668htswqy4

private_key_file: ~/path/to/key.pem

```

??? info "Credentials file"

It's also possible to configure the `nebius` backend using a credentials file [generated :material-arrow-top-right-thin:{ .external }](https://docs.nebius.com/iam/service-accounts/authorized-keys#create){:target="_blank"} by the `nebius` CLI:

```shell

$ nebius iam auth-public-key generate \

--service-account-id \

--output ~/.nebius/sa-credentials.json

```

```yaml

projects:

- name: main

backends:

- type: nebius

creds:

type: service_account

filename: ~/.nebius/sa-credentials.json

```

??? info "User interface"

If you are configuring the `nebius` backend on the [project settigns page](projects.md#backends),

specify the contents of the private key file in `private_key_content`:

```yaml

type: nebius

creds:

type: service_account

service_account_id: serviceaccount-e00dhnv9ftgb3cqmej

public_key_id: publickey-e00ngaex668htswqy4

private_key_content: |

-----BEGIN PRIVATE KEY-----

MIIJQQIBADANBgkqhkiG9w0BAQEFAASCCSswggknAgEAAoICAQChwQ5OOhy60N7m

cPx/9M0oRUyJdRRv2nCALbdU/wSDOo8o5N7sP63zCaxXPeKwLNEzneMd/U0gWSv2

[...]

8y1qYDPKQ8LR+DPCUmyhM2I8t6673Vz3GrtEjkLhgQo/KqOVb3yiBFVfkA5Jov5s

kO7y4T0ynsI8b6wlhCukQTLpIYJ5

-----END PRIVATE KEY-----

```

??? info "Projects"

If you have multiple projects per region, specify which ones to use, at most one per region.

```yaml

type: nebius

projects:

- project-e00jt6t095t1ahrg4re30

- project-e01iahuh3cklave4ao1nv

creds:

type: service_account

service_account_id: serviceaccount-e00dhnv9ftgb3cqmej

public_key_id: publickey-e00ngaex668htswqy4

private_key_file: ~/path/to/key.pem

```

!!! info "Python version"

Nebius is only supported if `dstack server` is running on Python 3.10 or higher.

### Vultr

Log into your [Vultr :material-arrow-top-right-thin:{ .external }](https://www.vultr.com/) account, click `Account` in the sidebar, select `API`, find the `Personal Access Token` panel and click the `Enable API` button. In the `Access Control` panel, allow API requests from all addresses or from the subnet where your `dstack` server is deployed.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: vultr

creds:

type: api_key

api_key: B57487240a466624b48de22865589

```

### CUDO

Log into your [CUDO Compute :material-arrow-top-right-thin:{ .external }](https://compute.cudo.org/) account, click API keys in the sidebar, and click the `Create an API key` button.

Ensure you've created a project with CUDO Compute, then proceed to configuring the backend.

```yaml

projects:

- name: main

backends:

- type: cudo

project_id: my-cudo-project

creds:

type: api_key

api_key: 7487240a466624b48de22865589

```

### OCI

There are two ways to configure OCI: using client credentials or using the default credentials.

=== "Default credentials"

If you have default credentials set up in `~/.oci/config`, configure the backend like this:

```yaml

projects:

- name: main

backends:

- type: oci

creds:

type: default

```

=== "Client credentials"

Log into the [OCI Console :material-arrow-top-right-thin:{ .external }](https://cloud.oracle.com), go to `My profile`,

select `API keys`, and click `Add API key`.

Once you add a key, you'll see the configuration file. Copy its values to configure the backend as follows:

```yaml

projects:

- name: main

backends:

- type: oci

creds:

type: client

user: ocid1.user.oc1..g5vlaeqfu47akmaafq665xsgmyaqjktyfxtacfxc4ftjxuca7aohnd2ev66m

tenancy: ocid1.tenancy.oc1..ajqsftvk4qarcfaak3ha4ycdsaahxmaita5frdwg3tqo2bcokpd3n7oizwai

region: eu-frankfurt-1

fingerprint: 77:32:77:00:49:7c:cb:56:84:75:8e:77:96:7d:53:17

key_file: ~/.oci/private_key.pem

```

Make sure to include either the path to your private key via `key_file` or the contents of the key via `key_content`.

??? info "Required permissions"

This is an example of a restrictive policy for a group of `dstack` users:

```

Allow group

```yaml

projects:

- name: main

backends:

- type: datacrunch

creds:

type: api_key

client_id: xfaHBqYEsArqhKWX-e52x3HH7w8T

client_secret: B5ZU5Qx9Nt8oGMlmMhNI3iglK8bjMhagTbylZy4WzncZe39995f7Vxh8

```

### AMD Developer Cloud

Log into your [AMD Developer Cloud :material-arrow-top-right-thin:{ .external }](https://amd.digitalocean.com/login) account. Click `API` in the sidebar and click the button `Generate New Token`.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: amddevcloud

project_name: my-amd-project

creds:

type: api_key

api_key: ...

```

??? info "Project"

If `project_name` is not set, the default project will be used.

??? info "Required permissions"

The API key must have the following scopes assigned:

* `account` - read

* `droplet` - create, read, update, delete, admin

* `project` - create, read, update, delete

* `regions` - read

* `sizes` - read

* `ssh_key` - create, read, update, delete

### Digital Ocean

Log into your [Digital Ocean :material-arrow-top-right-thin:{ .external }](https://cloud.digitalocean.com/login) account. Click `API` in the sidebar and click the button `Generate New Token`.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: digitalocean

project_name: my-digital-ocean-project

creds:

type: api_key

api_key: ...

```

??? info "Project"

If `project_name` is not set, the default project will be used.

??? info "Required permissions"

The API key must have the following scopes assigned:

* `account` - read

* `droplet` - create, read, update, delete, admin

* `project` - create, read, update, delete

* `regions` - read

* `sizes` - read

* `ssh_key` - create, read, update,delete

### Hot Aisle

Log in to the SSH TUI as described in the [Hot Aisle Quick Start :material-arrow-top-right-thin:{ .external }](https://hotaisle.xyz/quick-start/).

Create a new team and generate an API key for the member in the team.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: hotaisle

team_handle: hotaisle-team-handle

creds:

type: api_key

api_key: 9c27a4bb7a8e472fae12ab34.3f2e3c1db75b9a0187fd2196c6b3e56d2b912e1c439ba08d89e7b6fcd4ef1d3f

```

??? info "Required permissions"

The API key must have the following roles assigned:

* **Owner role for the user** - Required for creating and managing SSH keys

* **Operator role for the team** - Required for managing virtual machines within the team

### CloudRift

Log into your [CloudRift :material-arrow-top-right-thin:{ .external }](https://console.cloudrift.ai/) console, click `API Keys` in the sidebar and click the button to create a new API key.

Ensure you've created a project with CloudRift.

Then proceed to configuring the backend.

```yaml

projects:

- name: main

backends:

- type: cloudrift

creds:

type: api_key

api_key: rift_2prgY1d0laOrf2BblTwx2B2d1zcf1zIp4tZYpj5j88qmNgz38pxNlpX3vAo

```

## Container-based

Container-based backends allow `dstack` to orchestrate container-based runs either directly on cloud providers that support containers or on Kubernetes.

In this case, `dstack` delegates provisioning to the cloud provider or Kubernetes.

Compared to [VM-based](#vm-based) backends, they offer less fine-grained control over provisioning but rely on the native logic of the underlying environment, whether that’s a cloud provider or Kubernetes.

### Kubernetes

Regardless of whether it’s on-prem Kubernetes or managed, `dstack` can orchestrate container-based runs across your clusters.

To use the `kubernetes` backend with `dstack`, you need to configure it with the path to the kubeconfig file, the IP address of any node in the cluster, and the port that `dstack` will use for proxying SSH traffic.

```yaml

projects:

- name: main

backends:

- type: kubernetes

kubeconfig:

filename: ~/.kube/config

proxy_jump:

hostname: 204.12.171.137

port: 32000

```

??? info "Proxy jump"

To allow the `dstack` server and CLI to access runs via SSH, `dstack` requires a node that acts as a jump host to proxy SSH traffic into containers.

To configure this node, specify `hostname` and `port` under the `proxy_jump` property:

- `hostname` — the IP address of any cluster node selected as the jump host. Both the `dstack` server and CLI must be able to reach it. This node can be either a GPU node or a CPU-only node — it makes no difference.

- `port` — any accessible port on that node, which `dstack` uses to forward SSH traffic.

No additional setup is required — `dstack` configures and manages the proxy automatically.

??? info "NVIDIA GPU Operator"

For `dstack` to correctly detect GPUs in your Kubernetes cluster, the cluster must have the

[NVIDIA GPU Operator :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/datacenter/cloud-native/gpu-operator/latest/index.html){:target="_blank"} pre-installed.

??? info "Required permissions"

The following Kubernetes permissions are sufficient for `dstack` to work:

```yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: dstack-backend

rules:

- apiGroups: [""]

resources: ["namespaces"]

verbs: ["get", "create"]

- apiGroups: [""]

resources: ["pods"]

verbs: ["get", "create", "delete"]

- apiGroups: [""]

resources: ["services"]

verbs: ["get", "create", "delete"]

- apiGroups: [""]

resources: ["nodes"]

verbs: ["list"]

```

Ensure you've created a ClusterRoleBinding to grant the role to the user or the service account you're using.

> To learn more, see the [Kubernetes](../guides/kubernetes.md) guide.

### RunPod

Log into your [RunPod :material-arrow-top-right-thin:{ .external }](https://www.runpod.io/console/) console, click Settings in the sidebar, expand the `API Keys` section, and click

the button to create a Read & Write key.

Then proceed to configuring the backend.

```yaml

projects:

- name: main

backends:

- type: runpod

creds:

type: api_key

api_key: US9XTPDIV8AR42MMINY8TCKRB8S4E7LNRQ6CAUQ9

```

??? info "Community Cloud"

By default, `dstack` considers instance offers from both the Secure Cloud and the

[Community Cloud :material-arrow-top-right-thin:{ .external }](https://docs.runpod.io/references/faq/#secure-cloud-vs-community-cloud).

You can tell them apart by their regions.

Secure Cloud regions contain datacenter IDs such as `CA-MTL-3`.

Community Cloud regions contain country codes such as `CA`.

```shell

$ dstack apply -f .dstack.yml -b runpod

# BACKEND REGION INSTANCE SPOT PRICE

1 runpod CA NVIDIA A100 80GB PCIe yes $0.6

2 runpod CA-MTL-3 NVIDIA A100 80GB PCIe yes $0.82

```

If you don't want to use the Community Cloud, set `community_cloud: false` in the backend settings.

```yaml

projects:

- name: main

backends:

- type: runpod

creds:

type: api_key

api_key: US9XTPDIV8AR42MMINY8TCKRB8S4E7LNRQ6CAUQ9

community_cloud: false

```

### Vast.ai

Log into your [Vast.ai :material-arrow-top-right-thin:{ .external }](https://cloud.vast.ai/) account, click Account in the sidebar, and copy your

API Key.

Then, go ahead and configure the backend:

```yaml

projects:

- name: main

backends:

- type: vastai

creds:

type: api_key

api_key: d75789f22f1908e0527c78a283b523dd73051c8c7d05456516fc91e9d4efd8c5

```

Also, the `vastai` backend supports on-demand instances only. Spot instance support coming soon.

## On-prem

In on-prem environments, the [Kubernetes](#kubernetes) backend can be used if a Kubernetes cluster is already set up and configured.

However, often [SSH fleets](../concepts/fleets.md#ssh-fleets) are a simpler and lighter alternative.

### SSH fleets

SSH fleets require no backend configuration.

All you need to do is [provide hostnames and SSH credentials](../concepts/fleets.md#ssh-fleets), and `dstack` sets up a fleet that can orchestrate container-based runs on your servers.

SSH fleets support the same features as [VM-based](#vm-based) backends.

!!! info "What's next"

1. See the [`~/.dstack/server/config.yml`](../reference/server/config.yml.md) reference

2. Check [Projects](../concepts/projects.md)

## Fleets

# Fleets

Fleets act both as pools of instances and as templates for how those instances are provisioned.

`dstack` supports two kinds of fleets:

* [Backend fleets](#backend-fleets) – dynamically provisioned through configured backends; they are supported with any type of backends: [VM-based](backends.md#vm-based) and [container-based](backends.md#container-based) (incl. [`kubernetes`](backends.md#kubernetes))

* [SSH fleets](#ssh-fleets) – created using on-prem servers; do not require backends

When you run `dstack apply` to start a dev environment, task, or service, `dstack` will reuse idle instances from an existing fleet whenever available.

## Backend fleets

If you configured [backends](backends.md), `dstack` can provision fleets on the fly.

However, it’s recommended to define fleets explicitly.

### Apply a configuration

To create a backend fleet, define a configuration as a YAML file in your project directory. The file must have a

`.dstack.yml` extension (e.g. `.dstack.yml` or `fleet.dstack.yml`).

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: default-fleet

# Can be a range or a fixed number

# Allow to provision of up to 2 instances

nodes: 0..2

# Uncomment to ensure instances are inter-connected

#placement: cluster

# Deprovision instances above the minimum if they remain idle

idle_duration: 1h

resources:

# Allow to provision up to 8 GPUs

gpu: 0..8

```

To create or update the fleet, pass the fleet configuration to [`dstack apply`](../reference/cli/dstack/apply.md):

```shell

$ dstack apply -f examples/misc/fleets/.dstack.yml

Provisioning...

---> 100%

FLEET INSTANCE BACKEND GPU PRICE STATUS CREATED

my-fleet - - - - - -

```

`dstack` always keeps the minimum number of nodes provisioned. Additional instances, up to the maximum limit, are provisioned on demand.

!!! info "Container-based backends"

For [container-based](backends.md#container-based) backends (such as `kubernetes`, `runpod`, etc), `nodes` must be defined as a range starting with `0`. In these cases, instances are provisioned on demand as needed.

??? info "Target number of nodes"

If `nodes` is defined as a range, you can start with more than the minimum number of instances by using the `target` parameter when creating the fleet.

```yaml

type: fleet

name: my-fleet

nodes:

min: 0

max: 2

# Provision 2 instances initially

target: 2

# Deprovision instances above the minimum if they remain idle

idle_duration: 1h

```

By default, when you submit a [dev environment](dev-environments.md), [task](tasks.md), or [service](services.md), `dstack` tries all available fleets. However, you can explicitly specify the [`fleets`](../reference/dstack.yml/dev-environment.md#fleets) in your run configuration

or via [`--fleet`](../reference/cli/dstack/apply.md#fleet) with `dstack apply`.

### Configuration options

#### Placement { #backend-placement }

To ensure instances are interconnected (e.g., for

[distributed tasks](tasks.md#distributed-tasks)), set `placement` to `cluster`.

This ensures all instances are provisioned with optimal inter-node connectivity.

??? info "AWS"

When you create a fleet with AWS, [Elastic Fabric Adapter networking :material-arrow-top-right-thin:{ .external }](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/efa.html){:target="_blank"} is automatically configured if it’s supported for the corresponding instance type.

Note, EFA requires the `public_ips` to be set to `false` in the `aws` backend configuration.

Otherwise, instances are only connected by the default VPC subnet.

Refer to the [EFA](../../examples/clusters/efa/index.md) example for more details.

??? info "GCP"

When you create a fleet with GCP, `dstack` automatically configures [GPUDirect-TCPXO and GPUDirect-TCPX :material-arrow-top-right-thin:{ .external }](https://cloud.google.com/kubernetes-engine/docs/how-to/gpu-bandwidth-gpudirect-tcpx-autopilot){:target="_blank"} networking for the A3 Mega and A3 High instance types, as well as RoCE networking for the A4 instance type.

!!! info "Backend configuration"

You may need to configure `extra_vpcs` and `roce_vpcs` in the `gcp` backend configuration.

Refer to the [A4](../../examples/clusters/a4/index.md),

[A3 Mega](../../examples/clusters/a3mega/index.md), and

[A3 High](../../examples/clusters/a3high/index.md) examples for more details.

??? info "Nebius"

When you create a fleet with Nebius, [InfiniBand networking :material-arrow-top-right-thin:{ .external }](https://docs.nebius.com/compute/clusters/gpu){:target="_blank"} is automatically configured if it’s supported for the corresponding instance type.

Otherwise, instances are only connected by the default VPC subnet.

An InfiniBand fabric for the cluster is selected automatically. If you prefer to use some specific fabrics, configure them in the

[backend settings](../reference/server/config.yml.md#nebius).

The `cluster` placement is supported for `aws`, `azure`, `gcp`, `nebius`, `oci`, and `vultr`

backends.

> For more details on optimal inter-node connectivity, read the [Clusters](../guides/clusters.md) guide.

#### Resources

When you specify a resource value like `cpu` or `memory`,

you can either use an exact value (e.g. `24GB`) or a

range (e.g. `24GB..`, or `24GB..80GB`, or `..80GB`).

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: my-fleet

nodes: 2

resources:

# 200GB or more RAM

memory: 200GB..

# 4 GPUs from 40GB to 80GB

gpu: 40GB..80GB:4

# Disk size

disk: 500GB

```

The `gpu` property allows specifying not only memory size but also GPU vendor, names

and their quantity. Examples: `nvidia` (one NVIDIA GPU), `A100` (one A100), `A10G,A100` (either A10G or A100),

`A100:80GB` (one A100 of 80GB), `A100:2` (two A100), `24GB..40GB:2` (two GPUs between 24GB and 40GB),

`A100:40GB:2` (two A100 GPUs of 40GB).

??? info "Google Cloud TPU"

To use TPUs, specify its architecture via the `gpu` property.

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: my-fleet

nodes: 2

resources:

gpu: v2-8

```

Currently, only 8 TPU cores can be specified, supporting single TPU device workloads. Multi-TPU support is coming soon.

> If you’re unsure which offers (hardware configurations) are available from the configured backends, use the

> [`dstack offer`](../reference/cli/dstack/offer.md#list-gpu-offers) command to list them.

#### Blocks { #backend-blocks }

For backend fleets, `blocks` function the same way as in SSH fleets.

See the [`Blocks`](#ssh-blocks) section under SSH fleets for details on the blocks concept.

```yaml

type: fleet

name: my-fleet

resources:

gpu: NVIDIA:80GB:8

# Split into 4 blocks, each with 2 GPUs

blocks: 4

```

#### Idle duration

By default, fleet instances stay `idle` for 3 days and can be reused within that time.

If an instance is not reused within this period, it is automatically terminated.

To change the default idle duration, set

[`idle_duration`](../reference/dstack.yml/fleet.md#idle_duration) in the fleet configuration (e.g., `0s`, `1m`, or `off` for

unlimited).

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: my-fleet

nodes: 2

# Terminate instances idle for more than 1 hour

idle_duration: 1h

resources:

gpu: 24GB

```

#### Spot policy

By default, `dstack` uses on-demand instances. However, you can change that

via the [`spot_policy`](../reference/dstack.yml/fleet.md#spot_policy) property. It accepts `spot`, `on-demand`, and `auto`.

#### Retry policy

By default, if `dstack` fails to provision an instance or an instance is interrupted, no retry is attempted.

If you'd like `dstack` to do it, configure the

[retry](../reference/dstack.yml/fleet.md#retry) property accordingly:

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: my-fleet

nodes: 1

resources:

gpu: 24GB

retry:

# Retry on specific events

on_events: [no-capacity, interruption]

# Retry for up to 1 hour

duration: 1h

```

!!! info "Reference"

Backend fleets support many more configuration options,

incl. [`backends`](../reference/dstack.yml/fleet.md#backends),

[`regions`](../reference/dstack.yml/fleet.md#regions),

[`max_price`](../reference/dstack.yml/fleet.md#max_price), and

among [others](../reference/dstack.yml/fleet.md).

## SSH fleets

If you have a group of on-prem servers accessible via SSH, you can create an SSH fleet.

### Apply a configuration

Define a fleet configuration as a YAML file in your project directory. The file must have a

`.dstack.yml` extension (e.g. `.dstack.yml` or `fleet.dstack.yml`).

```yaml

type: fleet

# The name is optional, if not specified, generated randomly

name: my-fleet

# Uncomment if instances are interconnected

#placement: cluster

# SSH credentials for the on-prem servers

ssh_config:

user: ubuntu

identity_file: ~/.ssh/id_rsa

hosts:

- 3.255.177.51

- 3.255.177.52

```

??? info "Requirements"

1. Hosts must be pre-installed with Docker.

=== "NVIDIA"

2. Hosts with NVIDIA GPUs must also be pre-installed with CUDA 12.1 and

[NVIDIA Container Toolkit :material-arrow-top-right-thin:{ .external }](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html).

=== "AMD"

2. Hosts with AMD GPUs must also be pre-installed with AMDGPU-DKMS kernel driver (e.g. via

[native package manager :material-arrow-top-right-thin:{ .external }](https://rocm.docs.amd.com/projects/install-on-linux/en/latest/install/native-install/index.html)

or [AMDGPU installer :material-arrow-top-right-thin:{ .external }](https://rocm.docs.amd.com/projects/install-on-linux/en/latest/install/amdgpu-install.html).)

=== "Intel Gaudi"

2. Hosts with Intel Gaudi accelerators must be pre-installed with [Gaudi software and drivers](https://docs.habana.ai/en/latest/Installation_Guide/Driver_Installation.html#driver-installation).

This must include the drivers, `hl-smi`, and Habana Container Runtime.

=== "Tenstorrent"

2. Hosts with Tenstorrent accelerators must be pre-installed with [Tenstorrent software](https://docs.tenstorrent.com/getting-started/README.html#software-installation).

This must include the drivers, `tt-smi`, and HugePages.

3. The user specified must have passwordless `sudo` access.

4. The SSH server must be running and configured with `AllowTcpForwarding yes` in `/etc/ssh/sshd_config`.

5. The firewall must allow SSH and should forbid any other connections from external networks. For `placement: cluster` fleets, it should also allow any communication between fleet nodes.

To create or update the fleet, pass the fleet configuration to [`dstack apply`](../reference/cli/dstack/apply.md):

```shell

$ dstack apply -f examples/misc/fleets/.dstack.yml

Provisioning...

---> 100%

FLEET INSTANCE GPU PRICE STATUS CREATED

my-fleet 0 L4:24GB (spot) $0 idle 3 mins ago

1 L4:24GB (spot) $0 idle 3 mins ago

```

When you apply, `dstack` connects to the specified hosts using the provided SSH credentials,

installs the dependencies, and configures these hosts as a fleet.

Once the status of instances changes to `idle`, they can be used by dev environments, tasks, and services.

### Configuration options

#### Placement { #ssh-placement }

If the hosts are interconnected (i.e. share the same network), set `placement` to `cluster`.

This is required if you'd like to use the fleet for [distributed tasks](tasks.md#distributed-tasks).

??? info "Network"

By default, `dstack` automatically detects the network shared by the hosts.

However, it's possible to configure it explicitly via

the [`network`](../reference/dstack.yml/fleet.md#network) property.

[//]: # (TODO: Provide an example and more detail)

> For more details on optimal inter-node connectivity, read the [Clusters](../guides/clusters.md) guide.

#### Blocks { #ssh-blocks }

By default, a job uses the entire instance—e.g., all 8 GPUs. To allow multiple jobs on the same instance, set the `blocks` property to divide the instance. Each job can then use one or more blocks, up to the full instance.

```yaml

type: fleet

name: my-fleet

ssh_config:

user: ubuntu

identity_file: ~/.ssh/id_rsa

hosts:

- hostname: 3.255.177.51

blocks: 4

- hostname: 3.255.177.52

# As many as possible, according to numbers of GPUs and CPUs

blocks: auto

- hostname: 3.255.177.53

# Do not slice. This is the default value, may be omitted

blocks: 1

```

All resources (GPU, CPU, memory) are split evenly across blocks, while disk is shared.

For example, with 8 GPUs, 128 CPUs, and 2TB RAM, setting `blocks` to `8` gives each block 1 GPU, 16 CPUs, and 256 GB RAM.

Set `blocks` to `auto` to match the number of blocks to the number of GPUs.

!!! info "Distributed tasks"

Distributed tasks require exclusive access to all host resources and therefore must use all blocks on each node.

#### Environment variables

If needed, you can specify environment variables that will be used by `dstack-shim` and passed to containers.

[//]: # (TODO: Explain what dstack-shim is)

For example, these variables can be used to configure a proxy:

```yaml

type: fleet

name: my-fleet

env:

- HTTP_PROXY=http://proxy.example.com:80

- HTTPS_PROXY=http://proxy.example.com:80

- NO_PROXY=localhost,127.0.0.1

ssh_config:

user: ubuntu

identity_file: ~/.ssh/id_rsa

hosts:

- 3.255.177.51

- 3.255.177.52

```

#### Proxy jump

If fleet hosts are behind a head node (aka "login node"), configure [`proxy_jump`](../reference/dstack.yml/fleet.md#proxy_jump):

```yaml

type: fleet

name: my-fleet

ssh_config:

user: ubuntu

identity_file: ~/.ssh/worker_node_key

hosts:

- 3.255.177.51

- 3.255.177.52

proxy_jump:

hostname: 3.255.177.50

user: ubuntu

identity_file: ~/.ssh/head_node_key

```

To be able to attach to runs, both explicitly with `dstack attach` and implicitly with `dstack apply`, you must either

add a front node key (`~/.ssh/head_node_key`) to an SSH agent or configure a key path in `~/.ssh/config`:

```

Host 3.255.177.50

IdentityFile ~/.ssh/head_node_key

```

where `Host` must match `ssh_config.proxy_jump.hostname` or `ssh_config.hosts[n].proxy_jump.hostname` if you configure head nodes

on a per-worker basis.

!!! info "Reference"

For all SSH fleet configuration options, refer to the [reference](../reference/dstack.yml/fleet.md).

#### Troubleshooting

!!! info "Resources"

Once the fleet is created, double-check that the GPU, memory, and disk are detected correctly.

If the status does not change to `idle` after a few minutes or the resources are not displayed correctly, ensure that

all host requirements are satisfied.

If the requirements are met but the fleet still fails to be created correctly, check the logs at

`/root/.dstack/shim.log` on the hosts for error details.

## Manage fleets

### List fleets

The [`dstack fleet`](../reference/cli/dstack/fleet.md#dstack-fleet-list) command lists fleet instances and their status:

```shell

$ dstack fleet

FLEET INSTANCE BACKEND GPU PRICE STATUS CREATED

my-fleet 0 gcp (europe-west-1) L4:24GB (spot) $0.1624 idle 3 mins ago

1 gcp (europe-west-1) L4:24GB (spot) $0.1624 idle 3 mins ago

```

### Delete fleets

When a fleet isn't used by a run, you can delete it by passing the fleet configuration to `dstack delete`:

```shell

$ dstack delete -f cluster.dstack.yaml

Delete the fleet my-gcp-fleet? [y/n]: y

Fleet my-gcp-fleet deleted

```

Alternatively, you can delete a fleet by passing the fleet name to `dstack fleet delete`.

To terminate and delete specific instances from a fleet, pass `-i INSTANCE_NUM`.

!!! info "What's next?"

1. Check [dev environments](dev-environments.md), [tasks](tasks.md), and

[services](services.md)

2. Read the [Clusters](../guides/clusters.md) guide

## Dev environments

# Dev environments

A dev environment lets you provision an instance and access it with your desktop IDE.

## Apply a configuration

First, define a dev environment configuration as a YAML file in your project folder.

The filename must end with `.dstack.yml` (e.g. `.dstack.yml` or `dev.dstack.yml` are both acceptable).

```yaml

type: dev-environment

# The name is optional, if not specified, generated randomly

name: vscode

python: "3.11"

# Uncomment to use a custom Docker image

#image: huggingface/trl-latest-gpu

ide: vscode

# Uncomment to leverage spot instances

#spot_policy: auto

resources:

gpu: 24GB

```

To run a dev environment, pass the configuration to [`dstack apply`](../reference/cli/dstack/apply.md):

```shell

$ dstack apply -f examples/.dstack.yml

# BACKEND REGION RESOURCES SPOT PRICE

1 runpod CA-MTL-1 9xCPU, 48GB, A5000:24GB yes $0.11

2 runpod EU-SE-1 9xCPU, 43GB, A5000:24GB yes $0.11

3 gcp us-west4 4xCPU, 16GB, L4:24GB yes $0.214516

Submit the run vscode? [y/n]: y

Launching `vscode`...

---> 100%

To open in VS Code Desktop, use this link:

vscode://vscode-remote/ssh-remote+vscode/workflow

```

`dstack apply` automatically provisions an instance and sets up an IDE on it.

??? info "Windows"

On Windows, `dstack` works both natively and inside WSL. But, for dev environments,

it's recommended _not to use_ `dstack apply` _inside WSL_ due to a [VS Code issue :material-arrow-top-right-thin:{ .external }](https://github.com/microsoft/vscode-remote-release/issues/937){:target="_blank"}.

To open the dev environment in your desktop IDE, use the link from the output

(such as `vscode://vscode-remote/ssh-remote+fast-moth-1/workflow`).

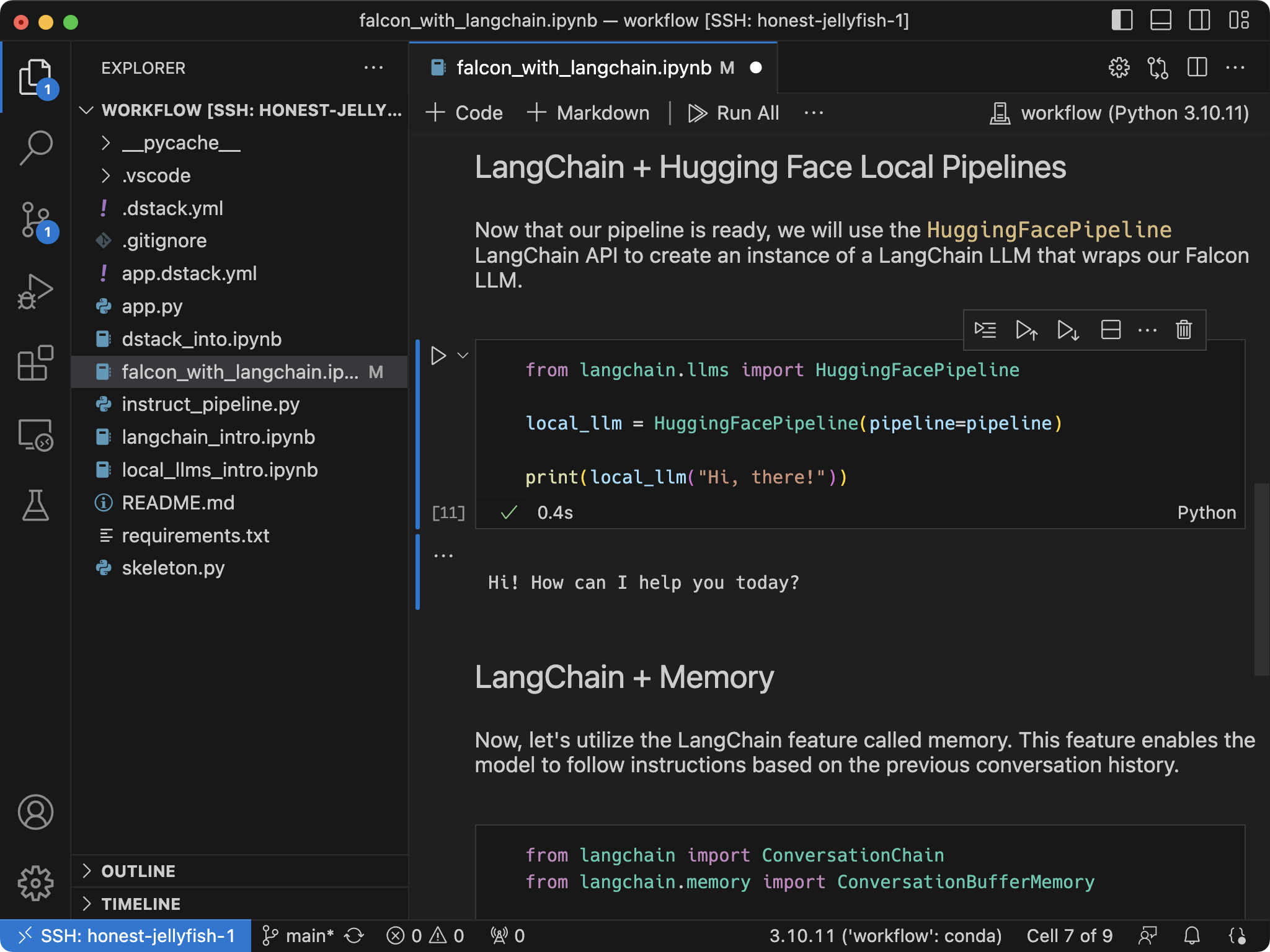

{ width=800 }

??? info "SSH"

Alternatively, while the CLI is attached to the run, you can connect to the dev environment via SSH:

```shell

$ ssh vscode

```

## Configuration options

### Initialization

If you want to pre-configure the dev environment, specify the [`init`](../reference/dstack.yml/dev-environment.md#init)

property with a list of commands to run at startup:

```yaml

type: dev-environment

name: vscode

python: "3.11"

ide: vscode

init:

- pip install wandb

```

### Resources

When you specify a resource value like `cpu` or `memory`,

you can either use an exact value (e.g. `24GB`) or a

range (e.g. `24GB..`, or `24GB..80GB`, or `..80GB`).

```yaml

type: dev-environment

# The name is optional, if not specified, generated randomly

name: vscode

ide: vscode

resources:

# 16 or more x86_64 cores

cpu: 16..

# 200GB or more RAM

memory: 200GB..

# 4 GPUs from 40GB to 80GB

gpu: 40GB..80GB:4

# Shared memory (required by multi-gpu)

shm_size: 16GB

# Disk size

disk: 500GB

```

The `cpu` property lets you set the architecture (`x86` or `arm`) and core count — e.g., `x86:16` (16 x86 cores), `arm:8..` (at least 8 ARM cores).

If not set, `dstack` infers it from the GPU or defaults to `x86`.

The `gpu` property lets you specify vendor, model, memory, and count — e.g., `nvidia` (one NVIDIA GPU), `A100` (one A100), `A10G,A100` (either), `A100:80GB` (one 80GB A100), `A100:2` (two A100), `24GB..40GB:2` (two GPUs with 24–40GB), `A100:40GB:2` (two 40GB A100s).

If vendor is omitted, `dstack` infers it from the model or defaults to `nvidia`.

??? info "Shared memory"

If you are using parallel communicating processes (e.g., dataloaders in PyTorch), you may need to configure

`shm_size`, e.g. set it to `16GB`.

> If you’re unsure which offers (hardware configurations) are available from the configured backends, use the

> [`dstack offer`](../reference/cli/dstack/offer.md#list-gpu-offers) command to list them.

### Docker

#### Default image

If you don't specify `image`, `dstack` uses its [base :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/tree/master/docker/base){:target="_blank"} Docker image pre-configured with

`uv`, `python`, `pip`, essential CUDA drivers, `mpirun`, and NCCL tests (under `/opt/nccl-tests/build`).

Set the `python` property to pre-install a specific version of Python.

```yaml

type: dev-environment

name: vscode

python: 3.12

ide: vscode

```

#### NVCC

By default, the base Docker image doesn’t include `nvcc`, which is required for building custom CUDA kernels.

If you need `nvcc`, set the [`nvcc`](../reference/dstack.yml/dev-environment.md#nvcc) property to true.

```yaml

type: dev-environment

name: vscode

python: 3.12

nvcc: true

ide: vscode

init:

- uv pip install flash_attn --no-build-isolation

```

#### Custom image

If you want, you can specify your own Docker image via `image`.

```yaml

type: dev-environment

name: vscode

image: huggingface/trl-latest-gpu

ide: vscode

```

#### Docker in Docker

Set `docker` to `true` to enable the `docker` CLI in your dev environment, e.g., to run or build Docker images, or use Docker Compose.

```yaml

type: dev-environment

name: vscode

docker: true

ide: vscode

init:

- docker run --gpus all nvidia/cuda:12.3.0-base-ubuntu22.04 nvidia-smi

```

Cannot be used with `python` or `image`. Not supported on `runpod`, `vastai`, or `kubernetes`.

#### Privileged mode

To enable privileged mode, set [`privileged`](../reference/dstack.yml/dev-environment.md#privileged) to `true`.

Not supported with `runpod`, `vastai`, and `kubernetes`.

#### Private registry

Use the [`registry_auth`](../reference/dstack.yml/dev-environment.md#registry_auth) property to provide credentials for a private Docker registry.

```yaml

type: dev-environment

name: vscode

env:

- NGC_API_KEY

image: nvcr.io/nim/deepseek-ai/deepseek-r1-distill-llama-8b

registry_auth:

username: $oauthtoken

password: ${{ env.NGC_API_KEY }}

ide: vscode

```

### Environment variables

```yaml

type: dev-environment

name: vscode

env:

- HF_TOKEN

- HF_HUB_ENABLE_HF_TRANSFER=1

ide: vscode

```

If you don't assign a value to an environment variable (see `HF_TOKEN` above),

`dstack` will require the value to be passed via the CLI or set in the current process.

??? info "System environment variables"

The following environment variables are available in any run by default:

| Name | Description |

|-------------------------|--------------------------------------------------|

| `DSTACK_RUN_NAME` | The name of the run |

| `DSTACK_REPO_ID` | The ID of the repo |

| `DSTACK_GPUS_NUM` | The total number of GPUs in the run |

| `DSTACK_WORKING_DIR` | The working directory of the run |

| `DSTACK_REPO_DIR` | The directory where the repo is mounted (if any) |

### Working directory

If `working_dir` is not specified, it defaults to `/workflow`.

The `working_dir` must be an absolute path. The tilde (`~`) is supported (e.g., `~/my-working-dir`).

### Files

Sometimes, when you run a dev environment, you may want to mount local files. This is possible via the [`files`](../reference/dstack.yml/task.md#_files) property. Each entry maps a local directory or file to a path inside the container.

```yaml

type: dev-environment

name: vscode

files:

- .:examples # Maps the directory where `.dstack.yml` to `/workflow/examples`

- ~/.ssh/id_rsa:/root/.ssh/id_rsa # Maps `~/.ssh/id_rsa` to `/root/.ssh/id_rsa`

ide: vscode

```

If the local path is relative, it’s resolved relative to the configuration file.

If the container path is relative, it’s resolved relative to `/workflow`.

The container path is optional. If not specified, it will be automatically calculated:

```yaml

type: dev-environment

name: vscode

files:

- ../examples # Maps `examples` (the parent directory of `.dstack.yml`) to `/workflow/examples`

- ~/.ssh/id_rsa # Maps `~/.ssh/id_rsa` to `/root/.ssh/id_rsa`

ide: vscode

```

??? info "File size"

Whether its a file or folder, each entry is limited to 2MB. To avoid exceeding this limit, make sure to exclude unnecessary files

by listing it via `.gitignore` or `.dstackignore`.

The 2MB upload limit can be increased by setting the `DSTACK_SERVER_CODE_UPLOAD_LIMIT` environment variable.

### Repos

Sometimes, you may want to mount an entire Git repo inside the container.

Imagine you have a cloned Git repo containing an `examples` subdirectory with a `.dstack.yml` file:

```yaml

type: dev-environment

name: vscode

repos:

# Mounts the parent directory of `examples` (must be a Git repo)

# to `/workflow` (the default working directory)

- ..

ide: vscode

```

When you run it, `dstack` fetches the repo on the instance, applies your local changes, and mounts it—so the container matches your local repo.

The local path can be either relative to the configuration file or absolute.

??? info "Repo directory"

By default, `dstack` mounts the repo to `/workflow` (the default working directory).

You can override the repo directory using either a relative or an absolute path:

```yaml

type: dev-environment

name: vscode

repos:

# Mounts the parent directory of `examples` (must be a Git repo)

# to `/my-repo`

- ..:/my-repo

ide: vscode

```

If the path is relative, it is resolved against [working directory](#working-directory).

??? info "Repo size"

The repo size is not limited. However, local changes are limited to 2MB.

To avoid exceeding this limit, exclude unnecessary files using `.gitignore` or `.dstackignore`.

You can increase the 2MB limit by setting the `DSTACK_SERVER_CODE_UPLOAD_LIMIT` environment variable.

??? info "Repo URL"

Sometimes you may want to mount a Git repo without cloning it locally. In this case, simply provide a URL in `repos`:

```yaml

type: dev-environment

name: vscode

repos:

# Clone the specified repo to `/workflow` (the default working directory)

- https://github.com/dstackai/dstack

ide: vscode

```

??? info "Private repos"

If a Git repo is private, `dstack` will automatically try to use your default Git credentials (from

`~/.ssh/config` or `~/.config/gh/hosts.yml`).

If you want to use custom credentials, you can provide them with [`dstack init`](../reference/cli/dstack/init.md).

> Currently, you can configure up to one repo per run configuration.

### Retry policy

By default, if `dstack` can't find capacity or the instance is interrupted, the run will fail.

If you'd like `dstack` to automatically retry, configure the

[retry](../reference/dstack.yml/dev-environment.md#retry) property accordingly:

```yaml

type: dev-environment

# The name is optional, if not specified, generated randomly

name: vscode

ide: vscode

retry:

# Retry on specific events

on_events: [no-capacity, error, interruption]

# Retry for up to 1 hour

duration: 1h

```

!!! info "Retry duration"

The duration period is calculated as a run age for `no-capacity` event

and as a time passed since the last `interruption` and `error` for `interruption` and `error` events.

### Inactivity duration

Set [`inactivity_duration`](../reference/dstack.yml/dev-environment.md#inactivity_duration)

to automatically stop the dev environment after a configured period of inactivity.

```yaml

type: dev-environment

name: vscode

ide: vscode

# Stop if inactive for 2 hours

inactivity_duration: 2h

```

The dev environment becomes inactive when you close the remote VS Code window,

close any `ssh

```shell

$ dstack ps -v

NAME BACKEND RESOURCES PRICE STATUS SUBMITTED

vscode cudo 2xCPU, 8GB, $0.0286 running 8 mins ago

100.0GB (disk) (inactive for 2m 34s)

```

If you reattach to the dev environment using [`dstack attach`](../reference/cli/dstack/attach.md),

the inactivity timer will be reset within a few seconds.

??? info "In-place update"

As long as the configuration defines the `name` property, the value of `inactivity_duration`

can be changed for a running dev environment without a restart.

Just change the value in the configuration and run `dstack apply` again.

```shell

$ dstack apply -f .dstack.yml

Detected configuration changes that can be updated in-place: ['inactivity_duration']

Update the run? [y/n]:

```

> `inactivity_duration` is not to be confused with [`idle_duration`](#idle-duration).

> The latter determines how soon the underlying cloud instance will be terminated

> _after_ the dev environment is stopped.

### Utilization policy

Sometimes it’s useful to track whether a dev environment is fully utilizing all GPUs. While you can check this with

[`dstack metrics`](../reference/cli/dstack/metrics.md), `dstack` also lets you set a policy to auto-terminate the run if any GPU is underutilized.

Below is an example of a dev environment that auto-terminate if any GPU stays below 10% utilization for 1 hour.

```yaml

type: dev-environment

name: my-dev

python: 3.12

ide: cursor

resources:

gpu: H100:8

utilization_policy:

min_gpu_utilization: 10

time_window: 1h

```

### Schedule

Specify `schedule` to start a dev environment periodically at specific UTC times using the cron syntax:

```yaml

type: dev-environment

ide: vscode

schedule:

cron: "0 8 * * mon-fri" # at 8:00 UTC from Monday through Friday

```

The `schedule` property can be combined with `max_duration` or `utilization_policy` to shutdown the dev environment automatically when it's not needed.

??? info "Cron syntax"

`dstack` supports [POSIX cron syntax](https://pubs.opengroup.org/onlinepubs/9699919799/utilities/crontab.html#tag_20_25_07). One exception is that days of the week are started from Monday instead of Sunday so `0` corresponds to Monday.

The month and day of week fields accept abbreviated English month and weekday names (`jan–dec` and `mon–sun`) respectively.

A cron expression consists of five fields:

```

┌───────────── minute (0-59)

│ ┌───────────── hour (0-23)

│ │ ┌───────────── day of the month (1-31)

│ │ │ ┌───────────── month (1-12 or jan-dec)

│ │ │ │ ┌───────────── day of the week (0-6 or mon-sun)

│ │ │ │ │

│ │ │ │ │

│ │ │ │ │

* * * * *

```

The following operators can be used in any of the fields:

| Operator | Description | Example |

|----------|-----------------------|-------------------------------------------------------------------------|

| `*` | Any value | `0 * * * *` runs every hour at minute 0 |

| `,` | Value list separator | `15,45 10 * * *` runs at 10:15 and 10:45 every day. |

| `-` | Range of values | `0 1-3 * * *` runs at 1:00, 2:00, and 3:00 every day. |

| `/` | Step values | `*/10 8-10 * * *` runs every 10 minutes during the hours 8:00 to 10:59. |

### Spot policy

By default, `dstack` uses on-demand instances. However, you can change that

via the [`spot_policy`](../reference/dstack.yml/dev-environment.md#spot_policy) property. It accepts `spot`, `on-demand`, and `auto`.

--8<-- "docs/concepts/snippets/manage-fleets.ext"

!!! info "Reference"

Dev environments support many more configuration options,

incl. [`backends`](../reference/dstack.yml/dev-environment.md#backends),

[`regions`](../reference/dstack.yml/dev-environment.md#regions),

[`max_price`](../reference/dstack.yml/dev-environment.md#max_price), and

[`max_duration`](../reference/dstack.yml/dev-environment.md#max_duration),

among [others](../reference/dstack.yml/dev-environment.md).

--8<-- "docs/concepts/snippets/manage-runs.ext"

!!! info "What's next?"

1. Read about [tasks](tasks.md) and [services](services.md)

2. Learn how to manage [fleets](fleets.md)

## Tasks

# Tasks

A task allows you to run arbitrary commands on one or more nodes.

They are best suited for jobs like training or batch processing.

## Apply a configuration

First, define a task configuration as a YAML file in your project folder.

The filename must end with `.dstack.yml` (e.g. `.dstack.yml` or `dev.dstack.yml` are both acceptable).

[//]: # (TODO: Make tabs - single machine & distributed tasks & web app)

```yaml

type: task

# The name is optional, if not specified, generated randomly

name: trl-sft

python: 3.12

# Uncomment to use a custom Docker image

#image: huggingface/trl-latest-gpu

env:

- MODEL=Qwen/Qwen2.5-0.5B

- DATASET=stanfordnlp/imdb

commands:

- uv pip install trl

- |

trl sft \

--model_name_or_path $MODEL --dataset_name $DATASET

--num_processes $DSTACK_GPUS_PER_NODE

resources:

# One to two H100 GPUs

gpu: H100:1..2

shm_size: 24GB

```

To run a task, pass the configuration to [`dstack apply`](../reference/cli/dstack/apply.md):

```shell

$ dstack apply -f .dstack.yml

# BACKEND REGION RESOURCES SPOT PRICE

1 runpod CA-MTL-1 18xCPU, 100GB, A5000:24GB:2 yes $0.22

2 runpod EU-SE-1 18xCPU, 100GB, A5000:24GB:2 yes $0.22

3 gcp us-west4 27xCPU, 150GB, A5000:24GB:3 yes $0.33

Submit the run trl-sft? [y/n]: y

Launching `axolotl-train`...

---> 100%

{'loss': 1.4967, 'grad_norm': 1.2734375, 'learning_rate': 1.0000000000000002e-06, 'epoch': 0.0}

0% 1/24680 [00:13<95:34:17, 13.94s/it]

6% 73/1300 [00:48<13:57, 1.47it/s]

```

`dstack apply` automatically provisions instances and runs the task.

## Configuration options

!!! info "No commands"

If `commands` are not specified, `dstack` runs `image`’s entrypoint (or fails if none is set).

### Ports

A task can configure ports. In this case, if the task is running an application on a port, `dstack apply`

will securely allow you to access this port from your local machine through port forwarding.

```yaml

type: task

name: streamlit-hello

python: 3.12

commands:

- uv pip install streamlit

- streamlit hello

ports:

- 8501

```

When running it, `dstack apply` forwards `8501` port to `localhost:8501`, enabling secure access to the running

application.

### Distributed tasks

By default, a task runs on a single node.

However, you can run it on a cluster of nodes by specifying `nodes`.

```yaml

type: task

name: train-distrib

nodes: 2

python: 3.12

env:

- NCCL_DEBUG=INFO

commands:

- git clone https://github.com/pytorch/examples.git pytorch-examples

- cd pytorch-examples/distributed/ddp-tutorial-series

- uv pip install -r requirements.txt

- |

torchrun \

--nproc-per-node=$DSTACK_GPUS_PER_NODE \

--node-rank=$DSTACK_NODE_RANK \

--nnodes=$DSTACK_NODES_NUM \

--master-addr=$DSTACK_MASTER_NODE_IP \

--master-port=12345 \

multinode.py 50 10

resources:

gpu: 24GB:1..2

shm_size: 24GB

```

Nodes can communicate using their private IP addresses.

Use `DSTACK_MASTER_NODE_IP`, `DSTACK_NODES_IPS`, `DSTACK_NODE_RANK`, and other

[System environment variables](#system-environment-variables) for inter-node communication.

`dstack` is easy to use with `accelerate`, `torchrun`, Ray, Spark, and any other distributed frameworks.

!!! info "MPI"

If want to use MPI, you can set `startup_order` to `workers-first` and `stop_criteria` to `master-done`, and use `DSTACK_MPI_HOSTFILE`.

See the [NCCL](../../examples/clusters/nccl-tests/index.md) or [RCCL](../../examples/clusters/rccl-tests/index.md) examples.

> For detailed examples, see [distributed training](../../examples.md#distributed-training) examples.

??? info "Network interface"

Distributed frameworks usually detect the correct network interface automatically,

but sometimes you need to specify it explicitly.

For example, with PyTorch and the NCCL backend, you may need

to add these commands to tell NCCL to use the private interface:

```yaml

commands:

- apt-get install -y iproute2

- >

if [[ $DSTACK_NODE_RANK == 0 ]]; then

export NCCL_SOCKET_IFNAME=$(ip -4 -o addr show | fgrep $DSTACK_MASTER_NODE_IP | awk '{print $2}')

else

export NCCL_SOCKET_IFNAME=$(ip route get $DSTACK_MASTER_NODE_IP | sed -E 's/.*?dev (\S+) .*/\1/;t;d')

fi

# ... The rest of the commands

```

??? info "SSH"

You can log in to any node from any node via SSH on port 10022 using the `~/.ssh/dstack_job` private key.

For convenience, `~/.ssh/config` is preconfigured with these options, so a simple `ssh

```yaml

type: task

name: trl-sft

python: 3.12

env:

- MODEL=Qwen/Qwen2.5-0.5B

- DATASET=stanfordnlp/imdb

commands:

- uv pip install trl

- |

trl sft \

--model_name_or_path $MODEL --dataset_name $DATASET

--num_processes $DSTACK_GPUS_PER_NODE

resources:

# 16 or more x86_64 cores

cpu: 16..

# 200GB or more RAM

memory: 200GB..

# 4 GPUs from 40GB to 80GB

gpu: 40GB..80GB:4

# Shared memory (required by multi-gpu)

shm_size: 24GB

# Disk size

disk: 500GB

```

The `cpu` property lets you set the architecture (`x86` or `arm`) and core count — e.g., `x86:16` (16 x86 cores), `arm:8..` (at least 8 ARM cores).

If not set, `dstack` infers it from the GPU or defaults to `x86`.

The `gpu` property lets you specify vendor, model, memory, and count — e.g., `nvidia` (one NVIDIA GPU), `A100` (one A100), `A10G,A100` (either), `A100:80GB` (one 80GB A100), `A100:2` (two A100), `24GB..40GB:2` (two GPUs with 24–40GB), `A100:40GB:2` (two 40GB A100s).

If vendor is omitted, `dstack` infers it from the model or defaults to `nvidia`.

??? info "Shared memory"

If you are using parallel communicating processes (e.g., dataloaders in PyTorch), you may need to configure

`shm_size`, e.g. set it to `24GB`.

> If you’re unsure which offers (hardware configurations) are available from the configured backends, use the

> [`dstack offer`](../reference/cli/dstack/offer.md#list-gpu-offers) command to list them.

### Docker

#### Default image

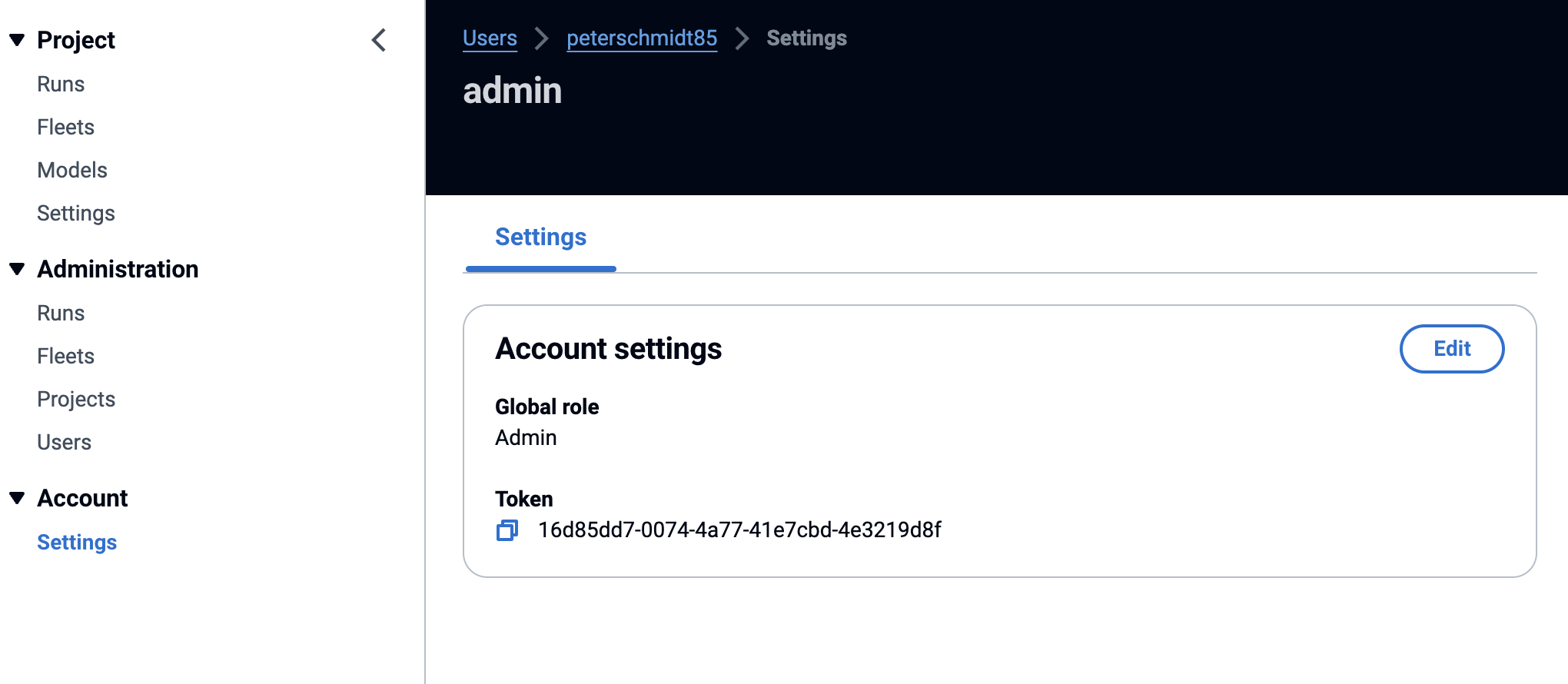

If you don't specify `image`, `dstack` uses its [base :material-arrow-top-right-thin:{ .external }](https://github.com/dstackai/dstack/tree/master/docker/base){:target="_blank"} Docker image pre-configured with