Supporting AMD accelerators on RunPod¶

While dstack helps streamline the orchestration of containers for AI, its primary goal is to offer vendor independence

and portability, ensuring compatibility across different hardware and cloud providers.

Inspired by the recent MI300X benchmarks, we are pleased to announce that RunPod is the first cloud provider to offer

AMD GPUs through dstack, with support for other cloud providers and on-prem servers to follow.

Specification¶

For the reference, below is a comparison of the MI300X and H100 SXM specs, incl. the prices offered by RunPod.

| MI300X | H100X SXM | |

|---|---|---|

| On-demand pricing | $3.99/hr | $3.99/hr |

| VRAM | 192 GB | 80GB |

| Memory bandwidth | 5.3 TB/s | 3.4TB/s |

| FP16 | 2,610 TFLOPs | 1,979 TFLOPs |

| FP8 | 5,220 TFLOPs | 3,958 TFLOPs |

One of the main advantages of the MI300X is its VRAM. For example, with the H100 SXM, you wouldn't be able to fit the FP16

version of Llama 3.1 405B into a single node with 8 GPUs—you'd have to use FP8 instead. However, with the MI300X, you

can fit FP16 into a single node with 8 GPUs, and for FP8, you'd only need 4 GPUs.

With the latest update,

you can now specify an AMD GPU under resources. Below are a few examples.

Configuration¶

Here's an example of a service that deploys Llama 3.1 70B in FP16 using TGI.

type: service

name: amd-service-tgi

image: ghcr.io/huggingface/text-generation-inference:sha-a379d55-rocm

env:

- HF_TOKEN

- MODEL_ID=meta-llama/Meta-Llama-3.1-70B-Instruct

- TRUST_REMOTE_CODE=true

- ROCM_USE_FLASH_ATTN_V2_TRITON=true

commands:

- text-generation-launcher --port 8000

port: 8000

# Register the model

model: meta-llama/Meta-Llama-3.1-70B-Instruct

# Uncomment to leverage spot instances

#spot_policy: auto

resources:

gpu: MI300X

disk: 150GB

Here's an example of a dev environment using TGI's Docker image:

type: dev-environment

name: amd-dev-tgi

image: ghcr.io/huggingface/text-generation-inference:sha-a379d55-rocm

env:

- HF_TOKEN

- ROCM_USE_FLASH_ATTN_V2_TRITON=true

ide: vscode

# Uncomment to leverage spot instances

#spot_policy: auto

resources:

gpu: MI300X

disk: 150GB

Docker image

Please note that if you want to use AMD, specifying image is currently required. This must be an image that includes

ROCm drivers.

To request multiple GPUs, specify the quantity after the GPU name, separated by a colon, e.g., MI300X:4.

Once the configuration is ready, run dstack apply -f <configuration file>, and dstack will automatically provision the

cloud resources and run the configuration.

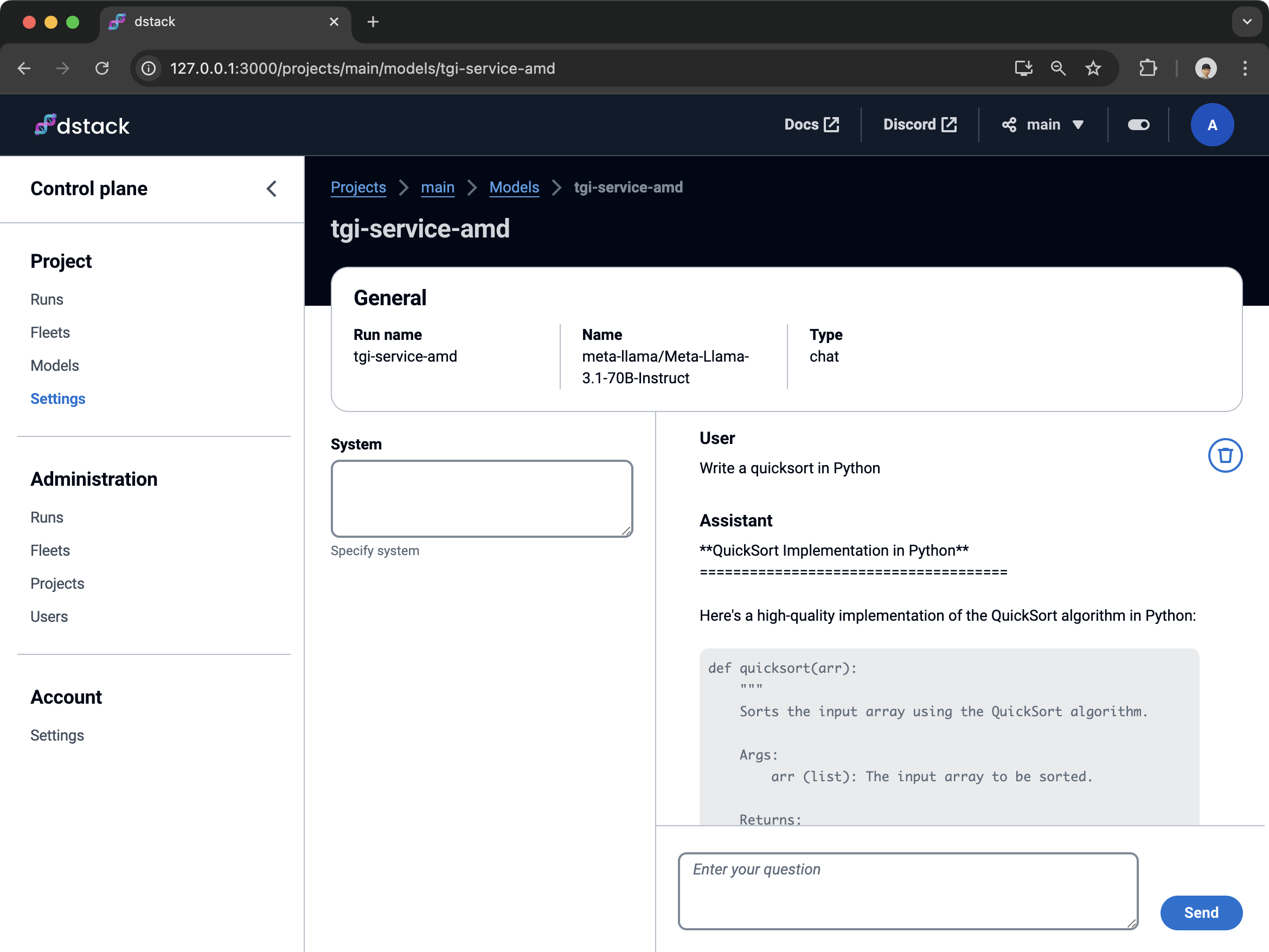

Control plane

If you specify model when running a service, dstack will automatically register the model on

an OpenAI-compatible endpoint and allow you to use it for chat via the control plane UI.

What's next?¶

- The examples above demonstrate the use of TGI. AMD accelerators can also be used with other frameworks like vLLM, Ollama, etc., and we'll be adding more examples soon.

- RunPod is the first cloud provider where dstack supports AMD. More cloud providers will be supported soon as well.

- Want to give RunPod and

dstacka try? Make sure you've signed up for RunPod, then set up thedstack server.

Have questioned or feedback? Join our Discord server.